For anyone who has taken a basic algebra course, the decimal point is such a fundamental and simple concept that its origin hardly seems like it could be a significant discovery in the history of mathematics. However, recent research argues that decimals were in use earlier than the math community thought.

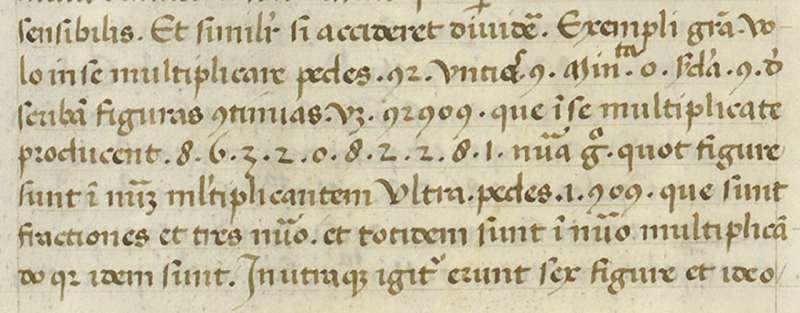

Bob Yirka, in an article from phys.org, states, “A mathematical historian at Trinity Western University in Canada, has found use of a decimal point by a Venetian merchant 150 years before its first known use by German mathematician Christopher Clavius. In his paper published in the journal Historia Mathematica, Glen Van Brummelen describes how he found the evidence of decimal use in a volume called ‘Tabulae,’ and its significance to the history of mathematics.”

Now, 150 years may sound like a short span of time considering the scope of the overall history of mathematics. However, it was previously thought that the decimal point first appeared in Christopher Clavius’ work, when he was creating astronomical tables in 1593. In other words, one of the most elementary notations in arithmetic has been around for roughly 580 years rather than 430, which is a significant revision.

“The new discovery was made in a part of a manuscript written by Giovanni Bianchini in the 1440s—Van Brummelen was discussing a section of trigonometric tables with a colleague when he noticed some of the numbers included a dot in the middle. One example was 10.4, which Bianchini then multiplied by 8 in the same way as is done with modern mathematics. The finding shows that a decimal point to represent non-whole numbers occurred approximately 150 years earlier than previously thought by math historians.” (Yirka)
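To see why multiplying 10.4 by 8 "in the same way as is done with modern mathematics" is so convenient, here is a minimal sketch in Python. It is not a reconstruction of Bianchini's own working; the function name `multiply_decimal_string` is made up for illustration. It only shows the general idea that a number written with a decimal point can be multiplied as a whole number, with the point put back afterward.

```python
from decimal import Decimal


def multiply_decimal_string(value: str, factor: int) -> Decimal:
    """Multiply a number written with a decimal point by a whole number.

    Hypothetical helper for illustration: it works on the written digits,
    the way one would on paper.
    """
    whole, _, fraction = value.partition(".")
    # Drop the point and read the digits as one integer: "10.4" -> 104 (tenths).
    as_integer = int(whole + fraction)
    # Ordinary whole-number multiplication: 104 * 8 = 832 (still tenths).
    product = as_integer * factor
    # Put the point back the same number of places from the right.
    return Decimal(product).scaleb(-len(fraction))


result = multiply_decimal_string("10.4", 8)
print(result)
```

The result agrees with multiplying the decimal directly, `Decimal("10.4") * 8`, which is the point: with decimal notation, fractional quantities follow the same rules as whole numbers.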

This discovery has led many other math historians to discuss how the use of the decimal point developed in the years leading up to 1593, when it appeared in Clavius’ work.